Last night was the Season of AI presentation. We started with Bill Wilder presenting the fundamentals of Generative AI and a quick introduction to Azure AI Studio, then I finished up with a .NET code walkthrough implementing Retrieval Augmented Generation (RAG) using Semantic Kernel.

It was nice to see a lot of regular faces and meet several new people.

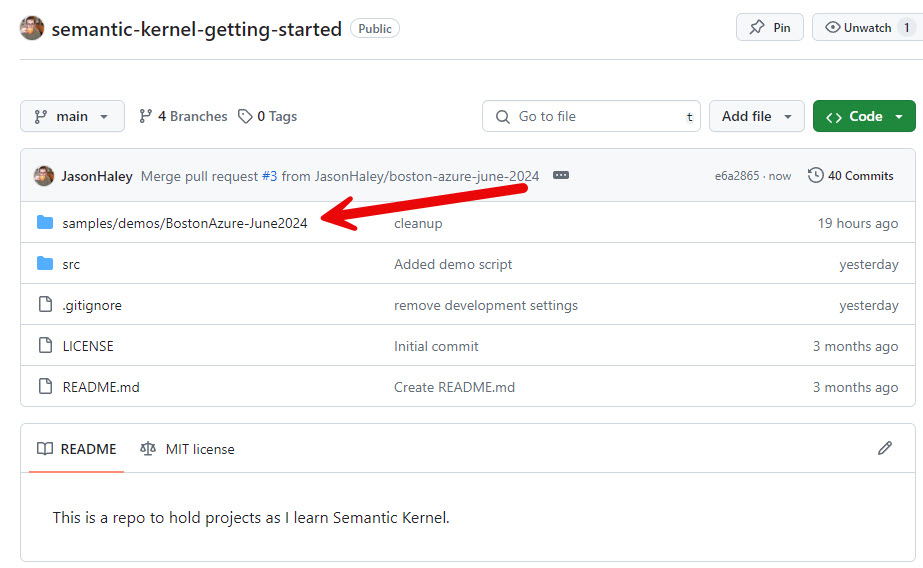

Demo Code

The demo code is on my GitHub repo BostonAzure-June2024 under a subdirectory.

The code is set up as it was at the beginning of the demo (i.e., a simple echo client/API implementation). You’ll find the steps I used to progressively build the demo in the demo-script.md file.

Since I ran out of time to do the last “bonus step”, you’ll find it at the end of that script, along with the full content of the final code (shown below):

```csharp
using Microsoft.Extensions.AI;
using Microsoft.ML.Tokenizers;
using Microsoft.SemanticKernel;
using Microsoft.SemanticKernel.Connectors.InMemory;
using Microsoft.SemanticKernel.Data;
using Microsoft.SemanticKernel.Text;
using System.Text;

var builder = WebApplication.CreateBuilder(args);

//builder.Services.AddKernel()
//    .AddOpenAIChatCompletion("gpt-4o", builder.Configuration["AI:OpenAI:ApiKey"])
//    .AddOpenAIEmbeddingGenerator("text-embedding-ada-002", builder.Configuration["AI:OpenAI:ApiKey"]);

builder.Services.AddKernel()
    .AddOpenAIChatCompletion("gpt-4o", builder.Configuration["AI:OpenAI:ApiKey"], null, null, new HttpClient(new RequestAndResponseLoggingHttpClientHandler()))
    .AddOpenAIEmbeddingGenerator("text-embedding-ada-002", builder.Configuration["AI:OpenAI:ApiKey"], null, null, new HttpClient(new RequestLoggingHttpClientHandler()));

var app = builder.Build();

// Step 2: Text Chunking
var code = File.ReadAllLines(@"transcript.txt");
var tokenizer = TiktokenTokenizer.CreateForModel("gpt-4o");
var chunks = TextChunker.SplitPlainTextParagraphs([.. code], 500, 100, null, text => tokenizer.CountTokens(text));

// Step 3: Vector Store
var embeddingGenerator = app.Services.GetRequiredService<IEmbeddingGenerator<string, Embedding<float>>>();
var vectorStore = new InMemoryVectorStore(new() { EmbeddingGenerator = embeddingGenerator });
using var textSearchStore = new TextSearchStore<string>(vectorStore, collectionName: "chunks", vectorDimensions: 1536);
await textSearchStore.UpsertTextAsync(chunks);

app.MapGet("/copilot", async (string question, Kernel kernel) =>
{
    // Step 4: Search the Vector Store
    var results = await textSearchStore.SearchAsync(question, new TextSearchOptions() { Top = 10 });

    var context = new StringBuilder();
    int tokensRemaining = 2000;

    await foreach (var result in results.Results)
    {
        //-----------------------------------------------------------------------------------------------------------------------------
        // Keep Prompt under specific size
        if ((tokensRemaining -= tokenizer.CountTokens(result)) < 0)
            break;
        //-----------------------------------------------------------------------------------------------------------------------------

        System.Console.WriteLine(result);
        System.Console.WriteLine("");

        context.AppendLine(result);
    }

    var prompts = kernel.CreatePluginFromPromptDirectory("Prompts");

    return prompts["RAG"].InvokeStreamingAsync<string>(kernel, new KernelArguments()
    {
        { "question", question },
        { "context", context.ToString() }
    });
});

app.Run();
```
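The endpoint loads a `RAG` prompt function from the `Prompts` directory and passes it the `question` and `context` arguments, but the prompt template itself isn't part of the listing. `CreatePluginFromPromptDirectory` treats each subdirectory as a function, so the template would live at `Prompts/RAG/skprompt.txt`. As a rough sketch only (the wording below is my assumption for illustration and the actual file in the repo may differ), a template consuming those two arguments could look like this:

```text
Answer the question using only the context provided below.
If the context does not contain the answer, say that you don't know.

Context:
{{$context}}

Question: {{$question}}
```

The `{{$context}}` and `{{$question}}` placeholders use Semantic Kernel's prompt template syntax, and they are filled from the `KernelArguments` entries with the same names.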

References and other learning materials

Here are some Semantic Kernel references that you may find useful:

- My Semantic Kernel Hello World blogs

- Semantic Kernel Discord

- Semantic Kernel Office Hours Videos

- Semantic Kernel Documentation

- Semantic Kernel GitHub

If you have a comment, please message me @haleyjason on Twitter/X.